GPT-3 & The Power of Prompts

What does the closest digital equivalent of our brains say about us?

Mitchell Pousson II / August 12, 2020

GPT-3 has taken the tech world by storm with little to no sign of slowing down.

Those with early access to the latest language generation model have showcased just how advanced the software truly is.

Some of the more prominent examples circulating on the internet include translating natural language into intricate website code, answering complex medical questions, and even training other machine learning models.

Despite all this impressive power, many are still skeptical of GPT-3 because it cannot tell "good" information sources from "bad" ones.

Essentially, it lacks the ability to distinguish a credible source like the New York Times from a less established medium such as a Reddit forum.

Because GPT-3 is dependent on users providing the right prompt, many are left wondering what could go wrong as the product inches closer to the public market.

The surprising thing about GPT-3 is that while it can appear incredibly intelligent, and almost human-like if provided the right prompt, it often fails to surface rational responses to what we would consider basic questions or prompts.

So what do the shortcomings of the closest digital equivalent of our brains tell us about ourselves?

One could argue that our thoughts and opinions are far more prompt-dependent than we realize.

While our brains are incredibly complex and far more intelligent than any computer, the wrong prompt can make them appear worthless relative to even a basic program.

If we look at people as we do GPT-3—a reservoir containing information we want to extract—the secret lies in asking the right question while providing a structure for the response to ensure it's understandable, relevant, and useful.

A slight variation in prompt can produce a dramatically different output, and sometimes it’s important to provide multiple prompts to expand upon partial outputs.
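
As a rough illustration of that point, here is a minimal Python sketch that builds a bare question and a structured version of the same question. The `build_prompt` helper, its fields, and the dispatcher scenario are all hypothetical, invented for this example. Nothing here calls GPT-3 or any real API; it only shows how much more the structured prompt hands to whoever, or whatever, is answering.

```python
def build_prompt(question, context=None, response_format=None):
    """Assemble a prompt from a question plus optional structure.

    Hypothetical helper for illustration only; not part of any GPT-3 API.
    """
    parts = []
    if context:
        parts.append(f"Context: {context}")
    parts.append(f"Question: {question}")
    if response_format:
        parts.append(f"Answer format: {response_format}")
    return "\n".join(parts)


# The same question, asked with and without structure.
vague = build_prompt("What do you need it to do?")
structured = build_prompt(
    "What do you need it to do?",
    context="You are a dispatcher scheduling deliveries on a tablet.",  # made-up scenario
    response_format="A numbered list of tasks, one per line.",
)

print(vague)
print("---")
print(structured)
```

The question is identical in both cases; only the surrounding context and the requested shape of the answer change.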

In this way, our most valuable insights and ideas are often tied to the prompts we are given.

So how do we optimize our ability to process the right prompts in order to achieve the best results?

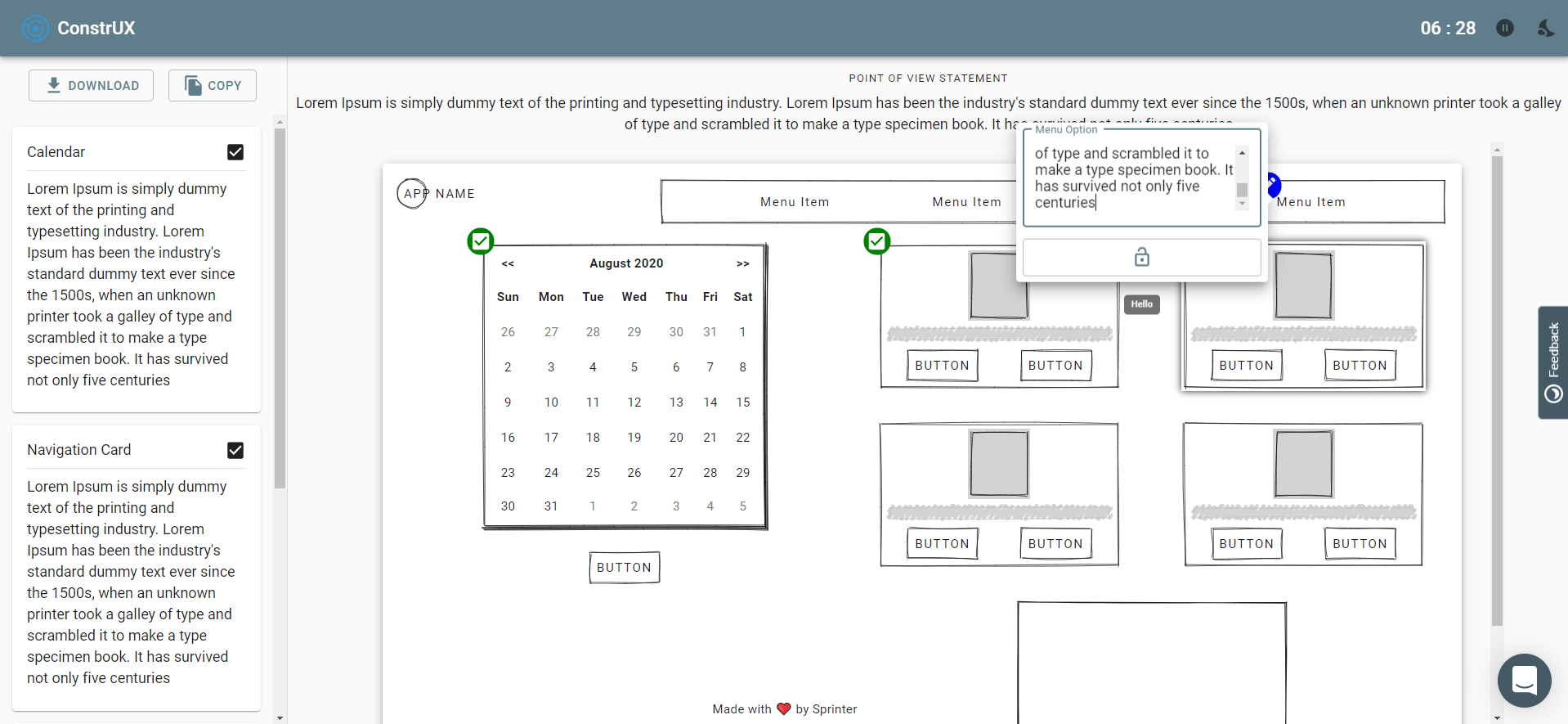

ConstrUX, the new product gathering tool we are building, offers users a variety of wireframes that serve as prompts for generating fresh ideas while also cultivating key insights.

Many requirement gathering tools fail largely because of the inadequate prompts they provide.

Examples of what doesn't work include a blank whiteboard, a flip chart, or sticky notes with instructions to “list what you need it to do.”

Just like GPT-3 receiving a prompt that is far too vague, users often freeze at the lack of creative constraints and have no idea where to start.

Although they intuitively know the general functionality they need to solve the problem, inadequate prompts almost always fail to produce a complete picture of a solution capable of meeting the users' unspoken demands.

In this analogy, our new product's end users play the role of GPT-3, and the combination of POV and wireframe is their prompt. Seven minutes is the computation time required, and the output is the list of requirements extracted.

Mixing up these prompts to solve a single problem can produce dramatically different results with unique and powerful benefits.
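
To make the idea of mixing prompts concrete, the sketch below pairs every point of view with every wireframe. The POV and wireframe names are made up for illustration and are not taken from ConstrUX; the only figure borrowed from the text is the 7 minutes per exercise, and the total assumes the exercises are run one after another.

```python
from itertools import product

# Hypothetical examples; not actual ConstrUX content.
povs = ["new customer", "support agent", "administrator"]
wireframes = ["dashboard", "sign-up form"]

MINUTES_PER_EXERCISE = 7  # from the article: 7 minutes per prompt

# Each (POV, wireframe) pair is one distinct prompt for the same problem.
prompts = list(product(povs, wireframes))

for pov, wireframe in prompts:
    print(f"As a {pov}, list what you need from this {wireframe}.")

total_minutes = len(prompts) * MINUTES_PER_EXERCISE
print(f"{len(prompts)} prompts, {total_minutes} minutes in total")
```

Even a handful of POVs and wireframes yields several distinct angles on a single problem.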

Our hypothesis is that the exercise's positive results are closely tied to the users' freedom to try out new approaches from differing angles while bound to the creative constraints the prompts provide.

Just as GPT-3 is only as valuable as the prompts it is given, our ideas are only as good as our ability to articulate them.

ConstrUX offers development teams an avenue for articulation that was previously reduced to verbal back-and-forth banter and illegible whiteboard drawings.

We've been very pleased with the results thus far and, just like the makers of GPT-3, look forward to bringing it to market.

This is how we help ordinary people build extraordinary products.